6.2011 – 10.2015

Microsoft Surface Touch Covers

MY ROLE

I was responsible for the experience of the Surface Touch Cover products and next-generation incubations. My focus was on the pressure-based typing experience, including the keyset gestures, touchpad, legend layouts, backlighting behavior, and tablet interaction.

THE CONTEXT

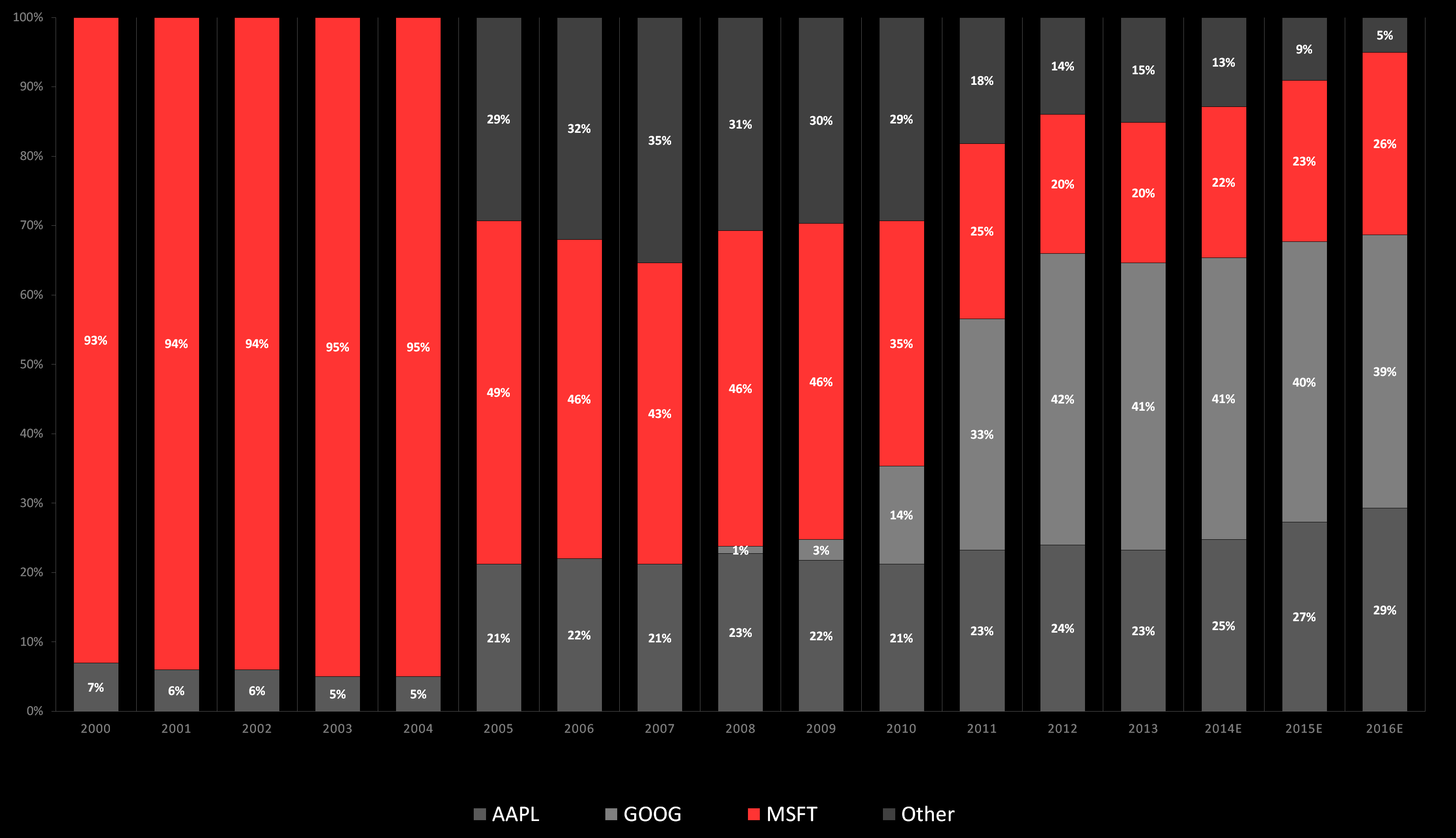

In 2005 Microsoft’s domination of personal devices was disrupted by Apple and Google. To reenvision itself, Microsoft kicked off the Surface business to influence the complacent, overly cost-focused PC manufacturers. It was an amazing opportunity, and one for which I had specifically joined Microsoft Hardware in 2003.

Market share of consumer compute 2000 – 2016

THE AUDIENCE

Tablet users wanting an experience more like a laptop.

MY GOALS

- Enable a super thin typing experience that’s better than the onscreen keyboard

- Establish Surface as a leading brand, challenging PC manufacturers to up their game

- Showcase the Windows 8 touch experience before OEMs

MY APPROACH

I started with the Applied Science team’s early pressure-sensing prototypes. The typing experience was rudimentary but promised the possibility of incredibly thin keyboards. Manufacturing cost, development complexity, and risk, however, drove the product team towards a simpler hardware architecture. Everything prototyped was to be redesigned.

Leverage the thin, high-sampling, low-cost, pressure-sensing architecture which Microsoft’s Applied Science group invented to create the thinnest tablet keyboard cover for Surface. Use pressure sensing to reliably disambiguate intent (between resting, typing, and gesturing fingers). Make it snap in effortlessly, helping you do more with your tablet. Make the cover feel like a book when it is held closed over the screen. Make the cover’s connection robust enough to stay in place as it takes abuse by you or your bag, yet easy enough for a child to tear off. Integrate it with the system so well that it becomes something you don’t think about or have to manage as you reorient and use it however and wherever you like.

For the first-generation Surface Touch Cover, manufacturing cost, development complexity, and risk drove the implementation to a simpler, cheaper architecture. These changes were big. Practically everything prototyped was to be redesigned from the ground up. After the sensor redesign, standard-sized keys were reasonably sensitive, larger keys more sensitive, smaller keys less sensitive, and the areas between keys gave almost no response. In other words, the system was capable of recognizing accurate key presses but was challenged by inaccurate ones. With Touch Cover’s compromised keyboard pitch, subtle keycap emboss, zero key travel, lack of mechanical feedback, and poor signal response between keys, typos were just too frequent, whether because of a missed character, a wrong character, or repeated characters. The design was far from mature and fostered little confidence.

Too often product design holds on tight to an already invested path, hoping the experience will be good enough when reality says it likely won’t be. In these moments the best way to help your engineers is to dive into the details and understand the architecture, its implementation, and the tools they use, so you can problem-solve side by side. With technical knowledge, I was able to communicate much more effectively, partnering directly to solve the toughest problems. It was crucial to be strategic, focusing on the long-lead items first. Usually this is the hardware, and for the Touch Cover it was the keyboard sensor layout.

Clear recommendations for keyboard ergonomics exist in human factors research and industry standards, but sometimes you need more to influence a team whose heads are down, who have little time to make changes, and who are consciously bending the rules for innovation. To convince the engineers quickly, the changes had to be specific and the reasons clear. In partnership with research, one of the most valuable things we did was to have touch typists with inked fingertips type on printed keyboard layouts. By visualizing key strike locations and coupling them with qualitative and quantitative performance data, we were able to steer the design back to a standard key pitch. To further improve typing performance, I advocated for smaller gaps between sensors, improved sensor uniformity, and taller sensors under the spacebar and down arrow to ensure signal response for even the sloppiest key presses. Every intentional key strike had to produce something.

Constrained by our desire for a 3mm thin product, the industrial designer, engineers and I explored fabric properties, emboss designs and the embossing process, tweaking pressure, temperature, and duration to accentuate keycap geometry, all for better keycap feel. With emboss depths less than 0.3mm, distances between emboss edges and the crispness of those edges were critical. Familiar dished keycaps were less feasible, recessed keycaps possible, but achieving a consistent aesthetic was not within the process. Our exploration led to the raised keycap and homing detail. It was a tough compromise, and one which inspired me to later lead efforts to prototype innovative feedback solutions for thin form factors.

These hardware improvements were important but not enough. Firmware needed to more accurately and reliably recognize intent. With traditional keyboards, there’s no intelligence to key press determination. If a key is pushed, the key is pushed. No disambiguation, no questions. Interestingly, people are more willing to accept their own typing errors with traditional keyboards, especially if the ergonomics are comfortable. For Touch Covers though, expectations were different. Instead of assuming responsibility for typos, people attributed them to the keyboard. The Touch Cover firmware had to make better decisions.

The product team’s test tools were crude at this point. I had a command line interface which enabled me to log any individual key’s signal for 5 seconds. That’s it. This method made it impossible to study natural typing behavior but made clear the system’s strengths and weaknesses. Through logs I visualized the signal response and highlighted the need for filtering nearly simultaneous keystrokes to improve the sloppy typist’s experience (essentially all of us). To improve the typing experience, I advocated that one strike must yield one character (even if it was off target). I recognized the design was making decisions faster than people could type, so I recommended limits for how quickly the same key, as well as different keys, could be successively pressed (based on hand function research from Martin et al. 1996, Terzuolo & Viviani 1980, van Galen & Wing 1984, Salthouse 1984, and Larochelle 1984).
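To make the idea of press-rate limits concrete, here is a minimal sketch of such a filter. It is not the Touch Cover firmware: the class name, the function name, and both thresholds are hypothetical values chosen for illustration, not figures taken from the cited research.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple

# Hypothetical limits, for illustration only (not the shipped values).
MIN_SAME_KEY_INTERVAL_MS = 100.0  # fastest plausible repeat of the same key
MIN_DIFF_KEY_INTERVAL_MS = 30.0   # fastest plausible gap between different keys


@dataclass
class KeystrokeFilter:
    """Suppresses key strikes that arrive faster than a person could plausibly type."""
    last_key: Optional[str] = None
    last_time_ms: Optional[float] = None

    def accept(self, key: str, time_ms: float) -> bool:
        if self.last_time_ms is not None:
            gap = time_ms - self.last_time_ms
            same_key = key == self.last_key
            limit = MIN_SAME_KEY_INTERVAL_MS if same_key else MIN_DIFF_KEY_INTERVAL_MS
            if gap < limit:
                return False  # too soon after the last accepted strike
        # Only accepted strikes move the reference point forward.
        self.last_key, self.last_time_ms = key, time_ms
        return True


def filter_events(events: Iterable[Tuple[str, float]]) -> List[str]:
    """Return the keys that survive filtering, in order."""
    f = KeystrokeFilter()
    return [key for key, time_ms in events if f.accept(key, time_ms)]


# The second "a" arrives 40 ms after the first and is dropped as a bounce.
print(filter_events([("a", 0), ("a", 40), ("s", 45), ("d", 120), ("d", 260)]))
# ['a', 's', 'd', 'd']
```

A filter like this trades away very fast rolls and simultaneous presses in exchange for fewer repeated or phantom characters.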

This recommendation meant “chording” QWERTY keys would not be possible. It also meant fast typists (who roll their fingers) may experience dropped characters. We needed better tools to solve these tougher challenges, so I drove specific requirements into test tool development to help us capture natural typing behavior, replay and scrub it, as well as visualize the recorded signal response in several ways.

With improved test tools, I was able to propose critical design changes based on real data. Characterizing typical key presses informed key press classification algorithms, helping the system recognize only what’s humanly possible. I was also able to suggest a method for isolating the intended key through event timeline analysis. These improvements significantly reduced typing anomalies and helped typing speeds exceed those achievable with the onscreen keyboard. The first generation Touch Cover was now good enough to use (if you were brave enough to step outside your comfort zone and stick with it). All of these improvements were significant, but a more optimal typing experience came with the next generation Touch Cover.

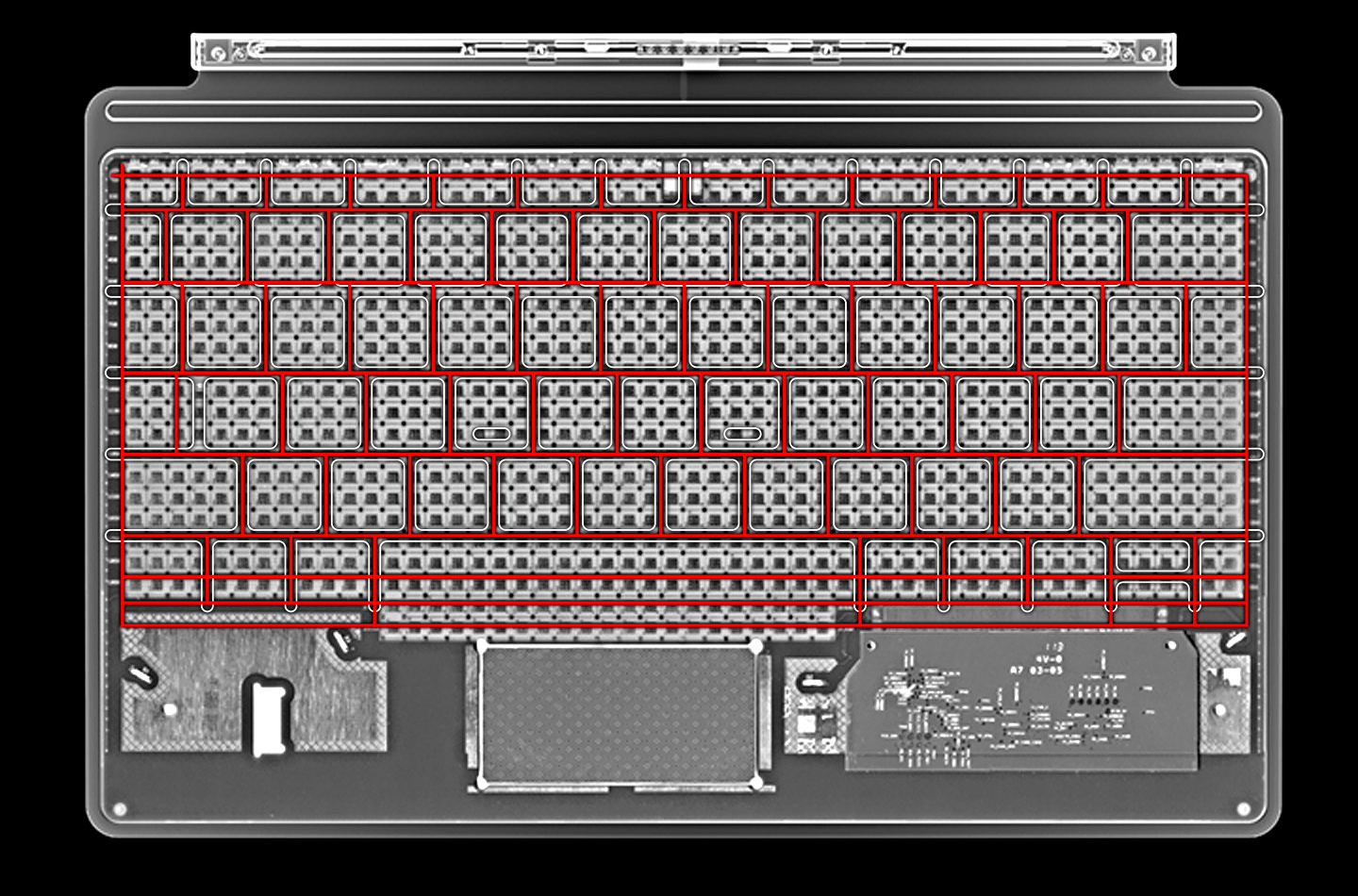

While I was involved with Touch Cover 1 development, the Applied Science group continued to incubate the original architecture for Touch Cover 2. The shift from a one-sensor-per-key design to a dense sensor array enabled sub-millimeter touch precision. In other words, the design no longer had to guess where your finger was. With that precision available, I advocated tweaking the digitally interpreted key geometry, further improving typing accuracy.
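One way to picture digitally interpreted key geometry is as hit regions that are resolved in software and can be tuned independently of the printed keycaps. The sketch below assumes the sensor array reports a touch position in millimeters. The region sizes, the `max_miss_mm` tolerance, and all names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class KeyRegion:
    """Digitally interpreted hit region for one key, in millimeters."""
    label: str
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

    def distance_to(self, px: float, py: float) -> float:
        """Distance from a touch point to this region (0.0 if inside it)."""
        dx = max(self.x - px, 0.0, px - (self.x + self.width))
        dy = max(self.y - py, 0.0, py - (self.y + self.height))
        return math.hypot(dx, dy)


def resolve_key(regions: Sequence[KeyRegion], px: float, py: float,
                max_miss_mm: float = 2.0) -> Optional[str]:
    """Return the nearest key's label, or None if the touch is too far from any key."""
    nearest = min(regions, key=lambda r: r.distance_to(px, py))
    return nearest.label if nearest.distance_to(px, py) <= max_miss_mm else None


# Two hypothetical keys with a 2 mm gap between them.
keys = [KeyRegion("F", 0.0, 0.0, 17.0, 17.0), KeyRegion("J", 19.0, 0.0, 17.0, 17.0)]
print(resolve_key(keys, 5.0, 5.0))    # inside F
print(resolve_key(keys, 18.4, 5.0))   # in the gap, closer to J
print(resolve_key(keys, 50.0, 5.0))   # far from every key, so None
```

Because the regions are data rather than hardware, an off-target strike in the gap between keys can still resolve to the nearest key instead of producing nothing.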

Touch Cover 1 sensor layout

Touch Cover 2 sensor layout

With each hardware iteration, machine learning was used to tune the firmware, analyzing large sets of typing and resting pressure data. The process streamlined optimizing algorithms and ensured the typing experience was tuned for a wider range of typical intentional and unintentional behavior. These efficiencies allowed me to focus on defining the keyset gesture interaction model and backlighting experience.

At the time there were several perspectives across the company forming around keyset gestures – some from the Windows input team, some from Microsoft Research, some from Applied Science and some from the work I had driven on previous keyboard incubations. As I considered each gesture, it had to be reconciled with the bigger picture. This was a paradigm shift, and it was important to establish a solid interaction model once, one which could scale to smaller devices and make sense within the overall Windows 8 experience.

The gestures which resonated most were those pertinent to typing. Whether reaching for the backspace key, touchpad, mouse or screen, changing posture seemed inefficient. I believed reducing these inefficiencies was important. Part of the solution was auto-correction, and with Windows investing in this space, I drove for external keyboard support. In collaboration with Windows I also co-designed a gesture that interacts with suggested words while you type. Swipe your thumb left or right on the spacebar to select a word and then tap to insert it.

I believed that controlling your insertion point (as you could your pointer) would be valuable and would help minimize hand posture changes. Tapping on screen between letters was difficult, and using the touchpad or arrow keys, while more precise, was always a longer interaction. A gesture at your fingertips was more efficient, so I proposed sliding two fingers across keys in any direction to move your insertion point.

While these gestures were interesting and brought new value, integrating them was not a simple matter. To support gestures, latency long enough to determine intent was necessary. Adding it to every key press was not an option, so the Applied Science group and I developed a solution that throttled latency based on user behavior. Note that any design which attempts to anticipate user intent is risky business. To mitigate risk, we weighted the design conservatively, which meant typing would be more important than gestures. Gestures became the “Easter egg” for those that discovered them – because they were never evangelized. They simply were not ready to be. A tough realization, but the right decision for the product.
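The mechanism behind this behavior-based latency is not described here, so the following is only one possible shape for the idea, under stated assumptions: no added delay during a typing burst, a small delay when the hands have been idle, and a longer one when a gesture was just used. Every name and number is hypothetical.

```python
# Hypothetical tuning values, for illustration only.
TYPING_BURST_MS = 500.0   # a key press this recent implies active typing
TYPING_DELAY_MS = 0.0     # typing wins: report the key immediately
IDLE_DELAY_MS = 40.0      # brief wait to see whether a swipe develops
GESTURE_DELAY_MS = 80.0   # longer wait right after a gesture was used


def decision_delay_ms(ms_since_last_key: float, recent_gesture: bool) -> float:
    """Pick how long to wait before committing a contact as a key press."""
    if recent_gesture:
        return GESTURE_DELAY_MS
    if ms_since_last_key < TYPING_BURST_MS:
        return TYPING_DELAY_MS
    return IDLE_DELAY_MS


print(decision_delay_ms(120.0, recent_gesture=False))   # mid-burst: 0.0
print(decision_delay_ms(3000.0, recent_gesture=False))  # idle hands: 40.0
print(decision_delay_ms(3000.0, recent_gesture=True))   # just gestured: 80.0
```

Setting the typing delay to zero reflects the conservative weighting described above: in this sketch a gesture can only be recognized when the user is not in the middle of typing.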

The desired backlighting aesthetic was also challenging to achieve – not because of function, but because of form. Several iterations of electrical, mechanical and fabric design / process were necessary. Light uniformity and sufficient legend contrast were critical to get right for common and less common lighting conditions. Contrast ratios of 5:1 or more (between legend and keycap color) were ideal and ratios as low as 3:1 were sufficient for most places people would go. I found that even a well-designed font was often insufficient for backlit legends. Thin, small characters next to larger, thicker ones were perceived differently (even when their actual brightness was the same). Pushing font weight and size without adversely affecting typography improved “perceived” uniformity. This process was more art than science.
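The 5:1 and 3:1 targets can be expressed as a small check. This sketch assumes the ratio is a plain luminance ratio between legend and keycap, measured in the same units. The text does not say how contrast was measured, so that definition and the function names are assumptions.

```python
def contrast_ratio(legend_luminance: float, keycap_luminance: float) -> float:
    """Ratio of the brighter to the darker luminance (same units for both)."""
    lighter = max(legend_luminance, keycap_luminance)
    darker = min(legend_luminance, keycap_luminance)
    if darker <= 0:
        raise ValueError("luminance values must be positive")
    return lighter / darker


def rate_legend_contrast(ratio: float) -> str:
    """Classify a ratio against the 5:1 (ideal) and 3:1 (sufficient) targets."""
    if ratio >= 5.0:
        return "ideal"
    if ratio >= 3.0:
        return "sufficient"
    return "insufficient"


print(rate_legend_contrast(contrast_ratio(60.0, 12.0)))  # 5:1 -> ideal
print(rate_legend_contrast(contrast_ratio(36.0, 12.0)))  # 3:1 -> sufficient
print(rate_legend_contrast(contrast_ratio(20.0, 12.0)))  # below 3:1 -> insufficient
```

A measured ratio says nothing about perceived uniformity across thin and thick characters, which is why the font work above still had to be judged by eye.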

Establishing the default brightness involved understanding battery impact and user preference. Fortunately, the design was efficient and its effect on battery life was nominal. To determine preference, I drove the development of test APIs which allowed us to change brightness on the fly. With these tools, we were able to research and evaluate user preference under various lighting conditions and transitions. What I learned was that perception actually varies with the abruptness of the transition. With gradual transitions, our eyes have time to adjust to brighter default backlighting settings, but with abrupt transitions, a brighter setting will bother us more (even after our eyes adjust to the context). A dim backlighting brightness was actually preferred and sufficient for low light conditions. It also boosted legend contrast under dim light conditions.

The most elegant solution, I knew, would be one that delivered backlighting when you needed it and not when you didn’t. If backlighting was on in a well-lit room or when your hands weren’t near, it was just wasting battery life. The challenge was to design a system that could consistently make the right assumptions and disappear into the background. After prototyping with proximity and ambient light sensors, presence detection through proximity seemed most appropriate. Ambient light sensors were just too susceptible to transient sources of shadow and light, whether caused by changes in posture, reflection or other fleeting conditions.

I was driving for an interaction that felt human. When you smile at someone, the sooner they smile back, the more delight you feel. As hands approach a keyboard, backlighting which subtly greets you makes you feel just like when a smile is reciprocated. The keyboard is acknowledging you. It is telling you it’s ready and waiting patiently as you think about what to say, aware that you are still there by remaining backlit. When you are done it just knows and quietly says goodbye, fading out slowly. This practically human interaction was the delight I was after. It simply changes your relationship with the device.
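The greet, wait, and goodbye behavior can be sketched as a small controller driven by a proximity signal. This is an illustrative model rather than the shipped design: the class name, the fade and linger timings, and the dim target brightness are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical tuning values, for illustration only.
FADE_IN_MS = 200.0        # quick "greeting" as hands approach
FADE_OUT_MS = 2000.0      # slow "goodbye" once hands have left
LINGER_MS = 5000.0        # stay lit this long after presence is lost
TARGET_BRIGHTNESS = 0.3   # dim default, as a fraction of full brightness


@dataclass
class BacklightController:
    brightness: float = 0.0
    absent_ms: float = 0.0

    def update(self, hands_present: bool, dt_ms: float) -> float:
        """Advance the controller by dt_ms and return the new brightness."""
        if hands_present:
            self.absent_ms = 0.0
            step = TARGET_BRIGHTNESS * dt_ms / FADE_IN_MS
            self.brightness = min(TARGET_BRIGHTNESS, self.brightness + step)
        else:
            self.absent_ms += dt_ms
            # Hold the light while the user may just be thinking, then fade out.
            if self.absent_ms > LINGER_MS:
                step = TARGET_BRIGHTNESS * dt_ms / FADE_OUT_MS
                self.brightness = max(0.0, self.brightness - step)
        return self.brightness


controller = BacklightController()
for _ in range(2):
    controller.update(hands_present=True, dt_ms=100.0)    # fades in over 200 ms
for _ in range(5):
    controller.update(hands_present=False, dt_ms=1000.0)  # lingers, still lit
print(round(controller.brightness, 2))
```

The fade-in is deliberately much faster than the fade-out, so the greeting feels prompt while the goodbye stays unobtrusive.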

For third generation Surface Covers, presence detection was cut to save a few cents. The final solution was ultimately less ideal and obviously not my preference …wink.